Kubernetes architecture

photo by Maximilian Weisbecker

The role of Kubernetes

Although there are plenty of posts and documentation about Kubernetes’s architecture, I wanted to post my own take on the topic, which is why I am writing this article.

Kubernetes (K8s) is an open-source Container Orchestration platform that automates many of the processes related to deploying, managing and scaling containerized applications.

As virtualization technologies have evolved, from deploying directly on bare-metal servers to deploying on fully virtualized hardware, the needs have evolved as well.

When organizations ran applications directly on bare-metal servers, there was no way to define resource boundaries for the applications on a physical server, and this caused resource allocation issues. In some situations one application took up most of the resources, making the other applications underperform. As a solution, full virtualization was introduced: it allows running multiple virtual machines (VMs) on a single physical server while providing isolation between the applications and a higher level of security. Virtualization allows better utilization of resources and greater scalability, presenting a set of physical resources as a cluster of disposable virtual machines.

As we saw in the post comparing VMs and Containers, VMs are slower and heavier than containers, since each VM has a full operating system installed inside it. Containers have relaxed isolation properties so that they can share the OS among applications, but each container has its own filesystem, share of CPU, memory, process space, etc. As they are decoupled from the underlying infrastructure, they are portable across clouds and OS distributions.

Containers are a good way to bundle and run applications, especially in the cloud, but in a production environment we need something that can manage those containers and ensure that the service is always online. Clusters, and cluster management software, have been around for years, but such software is even more necessary when managing a large number of individual services (each inside its own container), replicas, etc. Kubernetes provides you with a framework to run distributed systems resiliently. It takes care of scaling and failover for your application, provides deployment patterns, etc.

Kubernetes cluster and its API

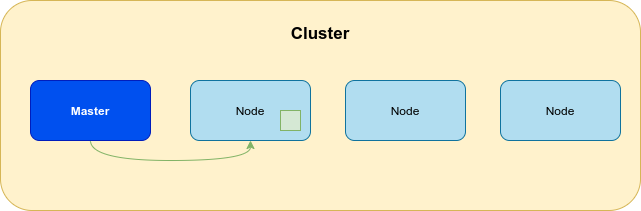

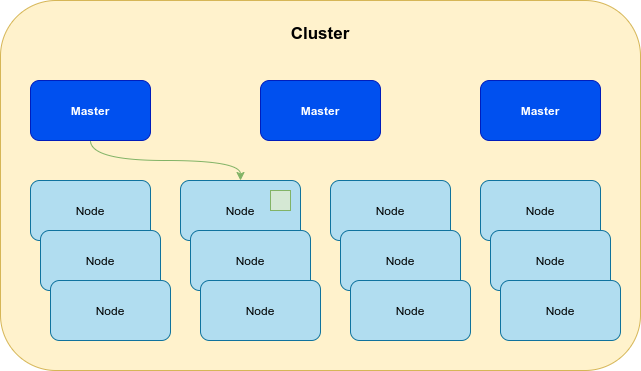

A working Kubernetes deployment is called a cluster. A Kubernetes cluster can be visualized as two parts: the Control Plane (Master) and the Compute Machines (nodes). Nodes are typically virtual machines.

Cluster

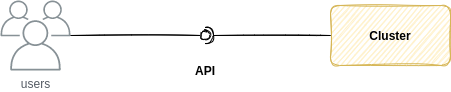

One of the strengths of Kubernetes is its ability to offer a complete management interface through a very well defined API, which spares users from having to know the internals of the cluster and, at the same time, eases the integration of third-party tools and systems. That API is RESTful, so it can be accessed both on local networks and on public clouds that offer managed versions of K8s.

Cluster API

K8s transforms virtual and physical machines into a unified API surface. A developer can then use the Kubernetes API to deploy, scale, and manage containerized applications.

The Kubernetes API allows you to define the resources and the state of both the cluster and the applications deployed on it by using Kubernetes Objects; these persistent entities are stored on the Master and can describe:

- What containerized applications are running (and on which nodes)

- The resources available to those applications

- The policies around how those applications behave, such as restart policies, upgrades, and fault-tolerance

A Kubernetes Object is a “record of intent”, which means that once you create the object, Kubernetes will constantly work to ensure that object exists. By creating an object, you’re effectively telling the system what you want your cluster’s workload to look like; this is your cluster’s desired state.

Developers can define those Kubernetes objects by using YAML files such as the following:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
    spec:
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nginx
      replicas: 1
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80

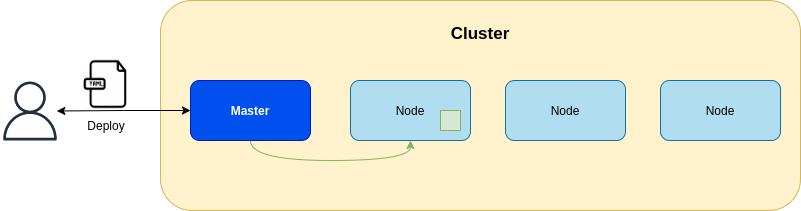

Those YAML files are translated by the CLI (kubectl) into REST calls to the API, and the Master stores all the resources defined in them.
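To make that translation a bit more tangible, here is a minimal Python sketch of how a manifest could be mapped to the REST call that creates it. The helper `rest_call_for` is hypothetical and only mimics the idea; in particular, the naive pluralization (`kind` lowercased plus "s") is an assumption made for brevity, whereas kubectl relies on the API server's discovery information.

```python
import json


def rest_call_for(manifest: dict, namespace: str = "default") -> tuple[str, str, str]:
    """Return the (method, path, body) a client could send to create the object.

    Hypothetical helper for illustration; it is not part of kubectl.
    """
    api_version = manifest["apiVersion"]
    # Assumption: naive pluralization. Real clients use API discovery instead.
    resource = manifest["kind"].lower() + "s"
    # The core group ("v1") is served under /api, named groups
    # such as "apps/v1" are served under /apis.
    prefix = "/apis" if "/" in api_version else "/api"
    path = f"{prefix}/{api_version}/namespaces/{namespace}/{resource}"
    return "POST", path, json.dumps(manifest)


# A trimmed-down version of the Deployment shown above.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "nginx"},
    "spec": {
        "replicas": 1,
        "selector": {"matchLabels": {"app": "nginx"}},
        "template": {
            "metadata": {"labels": {"app": "nginx"}},
            "spec": {"containers": [{"name": "nginx", "image": "nginx"}]},
        },
    },
}

method, path, body = rest_call_for(deployment)
print(method, path)  # POST /apis/apps/v1/namespaces/default/deployments
```

The body is sent as JSON here; the API server accepts JSON, so a client can convert the YAML before sending it.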

Deploying on Kubernetes

The Master then starts to create all the services, processes, etc. on the cluster to meet what is specified in that deployment file and, as mentioned before, it coordinates all the cluster’s components to reach and keep the desired state.
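The idea of "reaching and keeping the desired state" can be sketched as a small reconciliation loop. This is only a toy model under simplifying assumptions: the state is a plain list of Pod names, and `reconcile`, `apply` and `converge` are hypothetical names, not Kubernetes APIs.

```python
import itertools

# Hypothetical source of unique Pod names for this toy model.
_ids = itertools.count()


def reconcile(desired: int, running: list[str]) -> list[tuple[str, str]]:
    """One pass of the loop: compare desired and current state, return actions."""
    diff = desired - len(running)
    if diff > 0:
        # Too few replicas: ask for new Pods.
        return [("create", f"pod-{next(_ids)}") for _ in range(diff)]
    if diff < 0:
        # Too many replicas: remove the surplus.
        return [("delete", pod) for pod in running[desired:]]
    return []


def apply(actions: list[tuple[str, str]], running: list[str]) -> None:
    """Carry out the actions against the (simulated) cluster state."""
    for verb, pod in actions:
        if verb == "create":
            running.append(pod)
        else:
            running.remove(pod)


def converge(desired: int, running: list[str]) -> list[str]:
    """Repeat reconcile passes until there is nothing left to do.

    A real controller never stops: it keeps watching for changes.
    """
    while actions := reconcile(desired, running):
        apply(actions, running)
    return running


running = converge(3, [])   # initial deployment with 3 replicas
running.pop()               # simulate a Pod crashing
converge(3, running)        # the loop brings the count back to 3
print(len(running))         # 3
converge(1, running)        # scaling down is the same mechanism
print(len(running))         # 1
```

Note that the loop never receives an instruction like "start two more Pods"; it only receives the target and works out the difference itself, which is what makes the model declarative.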

Workload management

Kubernetes manages jobs (workloads) and those jobs run containers on nodes. The Master schedules jobs on nodes based on load. The picture above is a very simple configuration in which there is only one Master and three nodes, but a production deployment can involve thousands of nodes and multiple masters.
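As a rough illustration of scheduling "based on load", the sketch below first filters out the nodes that cannot fit a job and then picks the least loaded of the remaining ones. It is a simplification under stated assumptions: the `Node` fields, the scoring rule and the `schedule` function are hypothetical, and the real scheduler takes many more factors into account.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_free: float  # free CPU cores
    mem_free: int    # free memory in MiB


def schedule(cpu_request: float, mem_request: int, nodes: list[Node]) -> Optional[Node]:
    """Pick a node for a job, or return None if no node can host it."""
    # Step 1 (filter): keep only nodes with enough free resources.
    feasible = [
        n for n in nodes
        if n.cpu_free >= cpu_request and n.mem_free >= mem_request
    ]
    if not feasible:
        return None  # the job would stay pending
    # Step 2 (score): prefer the node left with the most free CPU,
    # using free memory as a tie-breaker.
    best = max(
        feasible,
        key=lambda n: (n.cpu_free - cpu_request, n.mem_free - mem_request),
    )
    # Reserve the resources on the chosen node.
    best.cpu_free -= cpu_request
    best.mem_free -= mem_request
    return best


nodes = [
    Node("node-1", cpu_free=1.0, mem_free=2048),
    Node("node-2", cpu_free=3.5, mem_free=4096),
    Node("node-3", cpu_free=0.5, mem_free=8192),
]

chosen = schedule(1.0, 1024, nodes)
print(chosen.name)                 # node-2
print(schedule(4.0, 512, nodes))   # None: no node has 4 free cores
```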

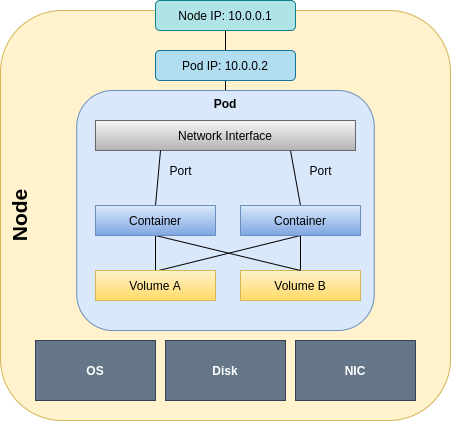

Those jobs, in Kubernetes terminology, are called Pods. The Pod is the basic unit of deployment for containers. Pods can run multiple containers that share networking and storage, separated from the underlying node.

As we can see, underneath a Pod we have the Node’s hardware, operating system and Network Interface Controller (NIC). Every Node gets its own IP.

Inside the Node there can be one or more Pods, depending on the Node’s capacity. Inside a Pod, we have the containers and their network interfaces. As described here, the Pod gets its own network namespace with a unique cluster IP (Pod IP) and set of ports. Containers in the same Pod can communicate with each other using localhost. Data is stored in volumes that reside in memory or on persistent disks. This is analogous to a VM, and it is highly portable because Pods can be moved to other nodes without reconfiguring or rebuilding anything.

It is very common to run only one container per Pod unless they have a hard dependency like a side-car application for logging or file updating.
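To give an idea of what such a side-car setup could look like, the following sketch builds a Pod definition with two containers that share a volume, expressed as a Python dictionary and printed as JSON. The function `pod_with_sidecar`, the container names, the volume name and the mount path are assumptions chosen for illustration.

```python
import json


def pod_with_sidecar(name: str, app_image: str, sidecar_image: str) -> dict:
    """Build a Pod object with an application container and a logging side-car.

    Hypothetical helper; names and paths are illustrative only.
    """
    # Both containers mount the same volume, so the side-car can read
    # the log files written by the application.
    shared_mount = {"name": "logs", "mountPath": "/var/log/app"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # emptyDir is a scratch volume that lives as long as the Pod.
            "volumes": [{"name": "logs", "emptyDir": {}}],
            "containers": [
                {"name": "app", "image": app_image, "volumeMounts": [shared_mount]},
                {"name": "log-shipper", "image": sidecar_image, "volumeMounts": [shared_mount]},
            ],
        },
    }


pod = pod_with_sidecar("web", "nginx", "busybox")
print(json.dumps(pod, indent=2))
```

Because both containers live in the same Pod, they are always scheduled on the same node and share the Pod's network namespace as well as the volume.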

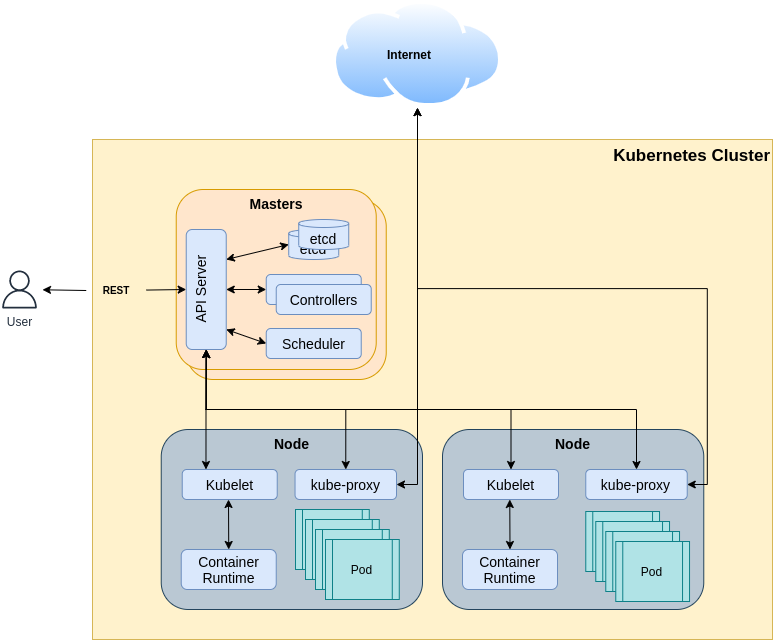

Kubernetes architecture internals

As we said, K8s’ architecture provides a flexible framework for distributed systems. K8s automatically orchestrates scaling and failovers for your applications and provides deployment patterns. It helps manage the containers that run the applications and helps keep downtime to a minimum in a production environment. For example, if a container goes down, another container automatically takes its place, ideally without the end user ever noticing. All of that is orchestrated by the Master nodes, which distribute the workload across the cluster’s nodes using Pods. It is time to dive a little deeper and look at the Kubernetes components that make this possible. In the following picture we can see those components:

- Master nodes: they provide the control plane for the cluster. As we saw, there can be more than one Master in a cluster, and all of them have the following components:

  - API Server: it is the front-end of the control plane and the only component that users can interact with directly. It exposes the Kubernetes API through a RESTful interface. Users can interact with the API via command-line tools like kubectl, through GUIs like the K8s Dashboard, or even by using curl directly.

  - etcd: etcd is a strongly consistent, distributed key-value store that provides a reliable way to store data that needs to be accessed by a distributed system or cluster of machines. It is the database used by the Master to back up all cluster data. It stores the entire configuration and state of the cluster in the form of Kubernetes Objects. The Master queries etcd to retrieve parameters for the state of the nodes, pods, and containers.

  - Scheduler: it is responsible for assigning your application’s Pods to nodes. It decides which Pod to place on which node based on resource requirements, hardware constraints and other factors, looking for the most suitable node that fulfills the requirements to run the application.

  - Controller Manager: it is a daemon that embeds the core control loops shipped with Kubernetes. In robotics and automation, a control loop is a non-terminating loop that regulates the state of the system. In Kubernetes, a controller is a control loop that watches the shared state of the cluster through the API Server and makes changes attempting to move the current state towards the desired state. Kubernetes comes with a set of built-in controllers that run inside the kube-controller-manager. These built-in controllers provide important core behaviors: replication controller, endpoints controller, namespace controller and service-accounts controller. There are also controllers that run outside the control plane to extend Kubernetes, and new controllers can be written using its SDK [1].

- Worker nodes:

  - Kubelet: it is the principal Kubernetes agent, deployed on every node within the cluster. It monitors the node’s CPU, RAM and storage and keeps the Master informed. It watches for tasks sent from the API Server, executes them, and reports back to the Master. It also monitors Pods and reports back to the control plane if a Pod is not fully functional. Based on that information, the Master can then decide how to allocate tasks and resources to reach the desired state. The Kubelet works in terms of a PodSpec, which is a YAML/JSON description, like the one shown before, of the Kubernetes Object that defines a Pod. Once the PodSpec is parsed, the Kubelet communicates with the Container runtime, which starts pulling the container images (if they are not in the node’s cache) and then instantiates the containers.

  - Kube-proxy: also called the service proxy, it runs on each node in the Kubernetes cluster and maintains the local network rules (for example, iptables rules) that handle routing. It load balances traffic between application components. It constantly watches for new services and creates the appropriate rules on each node to forward the traffic addressed to a service to its back-end pods.

  - Container runtime: the Container runtime is the component responsible for running (and managing) containers. When the Kubernetes project started, the only container runtime supported was Docker, but as the project evolved, the community introduced support for other runtimes such as containerd or CRI-O. Even Docker, very monolithic in nature, was split into the pure runtime runc and other, more peripheral functionalities such as those in charge of pulling and managing container images.
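The interaction between the Kubelet and the Container runtime described above can be sketched as follows. This is a toy model: `FakeRuntime` and `sync_pod` are hypothetical names, and the real Kubelet talks to the runtime through a much richer interface than these two methods.

```python
class FakeRuntime:
    """Stand-in for a container runtime such as containerd or CRI-O.

    Hypothetical interface used only for this illustration.
    """

    def __init__(self) -> None:
        self.image_cache: set[str] = set()        # images already on the node
        self.pulls: list[str] = []                # images that had to be downloaded
        self.running: list[tuple[str, str]] = []  # (container name, image)

    def pull(self, image: str) -> None:
        self.pulls.append(image)
        self.image_cache.add(image)

    def start(self, name: str, image: str) -> None:
        self.running.append((name, image))


def sync_pod(pod_spec: dict, runtime: FakeRuntime) -> None:
    """What the Kubelet does for one PodSpec, in very simplified form."""
    for container in pod_spec["containers"]:
        image = container["image"]
        # Pull the image only if it is not already in the node's cache.
        if image not in runtime.image_cache:
            runtime.pull(image)
        # Then ask the runtime to instantiate the container.
        runtime.start(container["name"], image)


runtime = FakeRuntime()
sync_pod({"containers": [{"name": "web-1", "image": "nginx"}]}, runtime)
sync_pod({"containers": [{"name": "web-2", "image": "nginx"}]}, runtime)

print(runtime.pulls)         # ['nginx']  (the second Pod reuses the cached image)
print(len(runtime.running))  # 2
```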