Mitigation strategies for Docker Hub restrictions

It’s been quite a while since Docker changed its policy [1] (on 2 November 2020) for downloading images from Docker Hub [2], its free container registry, where many companies and open source projects host their images. Docker now limits anonymous users to 100 downloads every 6 hours from a single IP address, so if you or your team reach that limit, you’ll get the following message:

ERROR: toomanyrequests: Too Many Requests.

or

You have reached your pull rate limit. You may increase the limit by authenticating and upgrading: https://www.docker.com/increase-rate-limits

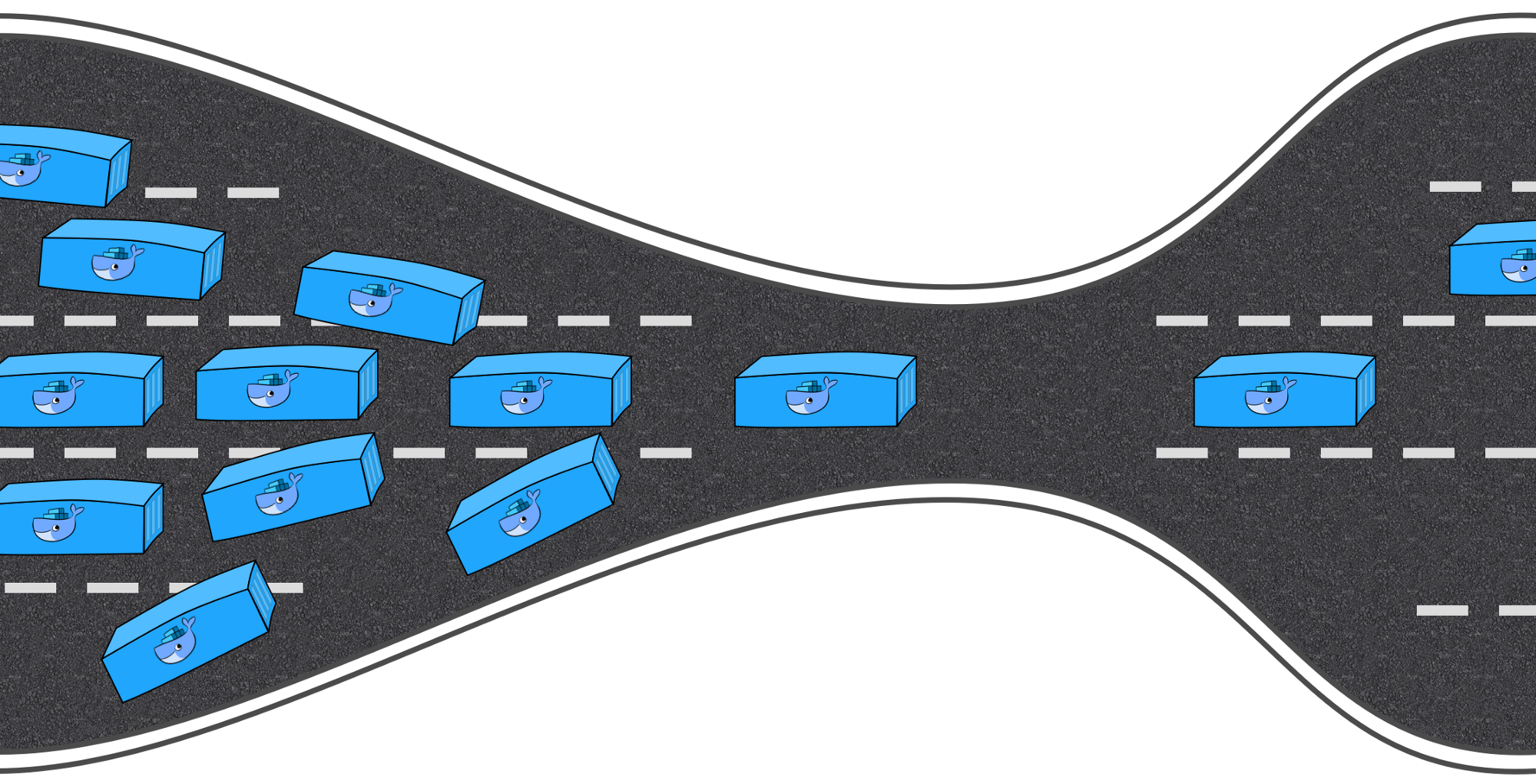

A hundred downloads in six hours from the same IP may sound like a lot, especially for individuals, but it is not for organizations, where all computers access the Internet through a NAT [3] and therefore everyone shares a limited pool of IPs. The situation is even worse for CI/CD systems, which can run hundreds of pipelines within six hours, most of them downloading images from Docker Hub. Container platforms such as Kubernetes and OpenShift might run into these limits as well when they scale or reschedule a deployment that uses those images, even when the nodes have the image cached (for example, when the pull policy makes the node check the registry every time). These events occur constantly in any container orchestration environment and are very likely to exhaust the quota of 100 (anonymous) or 200 (authenticated) pulls in 6 hours quickly, which might cause a service outage.
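To see how close a given IP address is to the limit, Docker Hub reports the quota in response headers. The sketch below follows the approach described in Docker's documentation: request an anonymous token for the `ratelimitpreview/test` repository, send a HEAD request for its manifest, and read the `ratelimit-limit` and `ratelimit-remaining` headers. It assumes `curl` and `jq` are installed, and the function names are only illustrative.

```shell
# Sketch: query Docker Hub's rate-limit headers for the current IP.
# Assumes curl and jq are installed; function names are illustrative.

# Extract the numeric part of a header value such as "100;w=21600".
ratelimit_value() {
  printf '%s\n' "$1" | cut -d';' -f1 | tr -d '[:space:]'
}

# Print the limit and the remaining pulls for anonymous requests from this IP.
check_dockerhub_ratelimit() {
  local token headers limit remaining
  token=$(curl -fsS "https://auth.docker.io/token?service=registry.docker.io&scope=repository:ratelimitpreview/test:pull" | jq -r .token)
  # A HEAD request returns the headers without downloading the manifest.
  headers=$(curl -fsS --head -H "Authorization: Bearer ${token}" \
    "https://registry-1.docker.io/v2/ratelimitpreview/test/manifests/latest")
  limit=$(printf '%s\n' "$headers" | awk -F': ' 'tolower($1) == "ratelimit-limit" {print $2}')
  remaining=$(printf '%s\n' "$headers" | awk -F': ' 'tolower($1) == "ratelimit-remaining" {print $2}')
  echo "limit=$(ratelimit_value "$limit") remaining=$(ratelimit_value "$remaining")"
}
```

Calling `check_dockerhub_ratelimit` from a build agent should print a line of the form `limit=<N> remaining=<M>`, which can be useful to log at the start of a pipeline.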

With the problem stated, here are some strategies that can mitigate it. Several of them are simply good practices that we should all have in place in our CI/CD systems and local development environments.

Use a non-anonymous user

The first one is the easiest: register on Docker Hub and log in with your credentials. The rate limit doubles for authenticated users, so you get 200 downloads every 6 hours. We can do the same on our CI/CD systems by creating a secret with those credentials to be used by Jenkins [4] or any other automation server we might use.
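As a minimal sketch of the CI/CD side, the function below logs in non-interactively. `DOCKERHUB_USER` and `DOCKERHUB_TOKEN` are hypothetical variable names; the assumption is that the automation server injects them from its secret store.

```shell
# Sketch: authenticate non-interactively, e.g. from a CI job.
# DOCKERHUB_USER and DOCKERHUB_TOKEN are hypothetical variable names that the
# CI system is assumed to inject from its secret store.
dockerhub_login() {
  : "${DOCKERHUB_USER:?DOCKERHUB_USER is not set}"
  : "${DOCKERHUB_TOKEN:?DOCKERHUB_TOKEN is not set}"
  # --password-stdin keeps the secret out of the process list and shell history.
  printf '%s' "$DOCKERHUB_TOKEN" | docker login --username "$DOCKERHUB_USER" --password-stdin
}
```

Running `dockerhub_login` as the first step of a pipeline means every later `docker pull` in that job counts against the authenticated quota rather than the anonymous one.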

Upgrade to Pro or Team subscriptions

For just a few dollars a month you can get unlimited container requests and other interesting features, such as image vulnerability scans. This solution may be optional for individuals, but it is a must for organizations. The CI/CD systems can use just a limited number of credentials, so developers do not each need their own.

Import public images into your private repository

This strategy, like the ones that follow, applies not only to this case but also to many other scenarios, since they are in fact good practices within the DevOps area.

If your container images depend on public images, it is good practice to import those into your private registry so that there’s no need to go to the Internet to fetch them. This avoids both the risk of someone unpublishing the image and the waste of bandwidth, and it also reduces the number of container image requests.
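A minimal sketch of such an import with the Docker CLI follows. `registry.example.com` and the `mirror/` prefix are placeholders, the function names are illustrative, and the sketch assumes you have already run `docker login` against the private registry.

```shell
# Sketch: copy a public image into a private registry.
# registry.example.com and the "mirror/" prefix are placeholders.

# Build the target reference,
# e.g. "nginx:1.25" -> "registry.example.com/mirror/nginx:1.25".
private_ref() {
  printf '%s/mirror/%s\n' "$2" "$1"
}

# Pull the public image, retag it and push it to the private registry.
import_image() {
  local src dst
  src=$1
  dst=$(private_ref "$src" "$2")
  docker pull "$src" && docker tag "$src" "$dst" && docker push "$dst"
}
```

For example, `import_image nginx:1.25 registry.example.com` pulls the image once and pushes it as `registry.example.com/mirror/nginx:1.25`; Dockerfiles and deployments would then reference the private name instead of the public one.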

Configure your Container Registry to perform as a proxy

Importing all the public container images used by a company into its private repository can be a hard task, and it sometimes ends up forcing the company to restrict usable images to an approved list. If you do not want to restrict the base images, the alternative is to implement a mirror/proxy strategy.

Package registries acting as proxies are quite a common configuration pattern for NPM or Maven registries [8]. Both can mirror the central repositories, so that whenever they cannot satisfy a request locally they proxy it and fetch the package upstream, becoming a de facto cache for packages. Unfortunately, this feature is not so common in container registries; at the time of writing, Azure Container Registry (ACR) [5], Quay [6] and Amazon ECR, for example, do not offer it. The Google Container Registry (GCR) [7] does implement this functionality.
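If your registry lacks this feature, one option that is not among the products named above is to run Docker's open source registry image as a pull-through cache. The sketch below is only an illustration: it uses the `REGISTRY_PROXY_REMOTEURL` setting of the `registry:2` image, while the container name and host port are arbitrary choices.

```shell
# Sketch: run the open source registry image as a pull-through cache of Docker Hub.
# The container name (hub-mirror) and host port (5000) are arbitrary choices.
start_hub_mirror() {
  docker run -d --restart always --name hub-mirror -p 5000:5000 \
    -e REGISTRY_PROXY_REMOTEURL=https://registry-1.docker.io \
    registry:2
}
```

Clients would then list the URL of this cache under `registry-mirrors` in their daemon configuration. A production setup would also need TLS and persistent storage, which this sketch leaves out.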

Cache your images whenever possible

Whether you use plain Docker, LXC or Kubernetes, there is always a mechanism to cache the base images or their layers so that your CI/CD system does not need to hit Docker Hub every time.
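With plain Docker, one simple way to rely on the local cache is to pull only when the image is missing. The function below is a minimal sketch with an illustrative name. It assumes the tag is pinned, because it will not notice that a tag has been updated upstream.

```shell
# Sketch: pull an image only when it is not already in the local cache.
pull_if_missing() {
  if docker image inspect "$1" >/dev/null 2>&1; then
    # A local copy exists, so the registry is not contacted at all.
    echo "cached: $1"
  else
    docker pull "$1"
  fi
}
```

A build script could call `pull_if_missing alpine:3.19` before each job, so that long-lived build agents contact Docker Hub once per image instead of once per pipeline run.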

Configure your Docker Daemon to use GCR’s cache

As described here [7], the Google Container Registry caches frequently-accessed public Docker Hub images on mirror.gcr.io, so the Docker Daemon can be configured to use a cached public image if one is available, or pull the image from Docker Hub if a cached copy is unavailable.

Add the following configuration to /etc/docker/daemon.json:

{
  "registry-mirrors": ["https://mirror.gcr.io"]
}

Restart the daemon:

sudo service docker restart
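After the restart, it is worth confirming that the daemon actually picked up the mirror. The check below is a small sketch with an illustrative function name. It reads the `RegistryConfig.Mirrors` field that `docker info` exposes and looks for the GCR mirror host.

```shell
# Sketch: confirm the daemon picked up the mirror after the restart.
# Succeeds (exit status 0) only if mirror.gcr.io is among the configured mirrors.
mirror_configured() {
  docker info --format '{{json .RegistryConfig.Mirrors}}' | grep -q 'mirror.gcr.io'
}
```

For instance, `mirror_configured && echo "mirror active"` can be added to a provisioning script so that a malformed daemon.json is noticed early rather than after the pull quota runs out.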